AWS technical architecture for a SaaS

The number of services available in AWS (or any of the other big cloud providers) can be overwhelming if you're just trying to build a side project. But if you're like me and you like to try things and learn, this post might be useful for you. I recently built a SaaS project and hosted all its components in AWS. In this article, I'll explain all the AWS services I used and how they fit together.

Project details

The project is a web application (with SSL enabled for HTTPS traffic) that has some public pages and a private dashboard that only logged-in users can access. The public pages have a few forms (contact, subscribe) that interact with back-end services to send emails or save data in MongoDB.

The private dashboard interacts with a back-end server that exposes an API and manages data stored in MongoDB. In addition, the back-end server runs multiple cron jobs for data reconciliation, email notifications, etc.

Both the web app and the back-end server are configured to automatically deploy new releases.

Oh, I almost forgot! There is a Twitter bot that automatically posts random tweets regularly based on data from MongoDB.

As you can see, it has a little bit of everything. Some pieces are coupled together, like the web app and the API, or the Twitter bot and MongoDB, while others are independent.

Database

I didn't want to manage a database server myself, so I opted for MongoDB Atlas. I have some free credits, and they even offer a free tier with up to 512MB of storage, which is enough for testing or even small apps. Spinning up a cluster is super easy and, once done, I just needed to configure different users with the permissions each one needs. So that was the easiest part, I'd say 😄

Web and API code build and runtime

I'm not going to get into the details of how I built this, but just for reference, my web app was built with Vue.js and the API with Strapi, a Node.js headless CMS.

To run both, I decided to build the web app and the API as Docker images and run them with docker-compose. The web app base image is nginx:stable-alpine, as it serves a static Vue.js app, and the API base image is node:12-alpine.

Whenever I have a new release ready, I run a simple bash script that builds the image and pushes it to DockerHub:

    #!/bin/bash

    # Stop at the first failing step so a broken build is never pushed.
    set -e

    echo "🧪🧪 Running unit tests for WEB"
    NODE_ENV=production npm run test:unit
    echo "🎉🎉 Unit tests ok"

    echo "🚧🚧 Creating local build for WEB"
    npm run build
    echo "🎉🎉 Local build successfully created!"

    echo "🐬🐬 Creating new version of Docker image"
    # Docker build and tag image as latest
    sudo docker build --rm -f "Dockerfile" -t DOCKERHUB_USER/REPO:webapp-latest "."
    echo "🎉🎉 Image built and tagged"

    # Push image to DockerHub
    docker push DOCKERHUB_USER/REPO:webapp-latest
    echo "🎉🎉 Image pushed to registry"
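If you want the release to say exactly which step broke, the same steps can be wrapped in a small helper. This is only a sketch: `run_step` and `release` are hypothetical names that are not part of my script, and it assumes `npm` and `docker` are available on the machine where you run it.

```shell
# Run one release step and report whether it succeeded.
# Usage: run_step "label" command [args...]
run_step() {
  local label status
  label=$1
  shift
  echo "==> $label"
  if "$@"; then
    echo "ok: $label"
  else
    status=$?
    echo "FAILED: $label (exit code $status)" >&2
    return "$status"
  fi
}

# Chain the steps with && so nothing is pushed after a failure.
# $1 is the full image reference, e.g. DOCKERHUB_USER/REPO:webapp-latest
release() {
  local image
  image=$1
  run_step "unit tests" npm run test:unit &&
  run_step "local build" npm run build &&
  run_step "docker build" docker build --rm -f Dockerfile -t "$image" . &&
  run_step "docker push" docker push "$image"
}
```

Calling `release DOCKERHUB_USER/REPO:webapp-latest` then runs the four steps in order and stops at the first one that returns a non-zero exit code.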

My docker-compose file to run both containers together looks like this:

    version: '3'

    services:
      app-api:
        container_name: app-api
        image: DOCKERHUB_USER/REPO:api-latest
        environment:
          NODE_ENV: production
        ports:
          - '1337:1337'

      web-app:
        container_name: web-app
        depends_on:
          - app-api
        image: DOCKERHUB_USER/REPO:webapp-latest
        ports:
          - '80:80'

As you can see, each container listens on a different port (the API on 1337 and the web app on 80). This is important, as we'll have to deal with these ports in the next step.
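A quick way to confirm that both containers are reachable on those ports is a small smoke check run on the server itself. This is a minimal sketch, not something from my setup: `check_port` is a hypothetical helper, and it assumes `curl` is installed and that both services answer with a successful status at `/`.

```shell
# Check that a service answers over HTTP on a local port.
# Usage: check_port <name> <port>
check_port() {
  local name port
  name=$1
  port=$2
  # -f makes curl fail on HTTP errors, --max-time avoids hanging forever.
  if curl -fsS -o /dev/null --max-time 5 "http://localhost:${port}/"; then
    echo "$name is answering on port $port"
  else
    echo "$name is NOT answering on port $port" >&2
    return 1
  fi
}
```

After a `docker-compose pull` and `docker-compose up -d`, running `check_port web-app 80 && check_port app-api 1337` should print one line per service if everything is up.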

Networking on AWS

I'd say networking has been the most complex topic in all of this, probably because it's not my strong suit. It involves multiple AWS services and the domain registrar, in my case Namecheap. I have different domains and subdomains:

- mydomain.app: the domain that opens the web app.

- api.mydomain.app: the domain for the API.

- hooks.mydomain.app: the domain for the webhooks server.

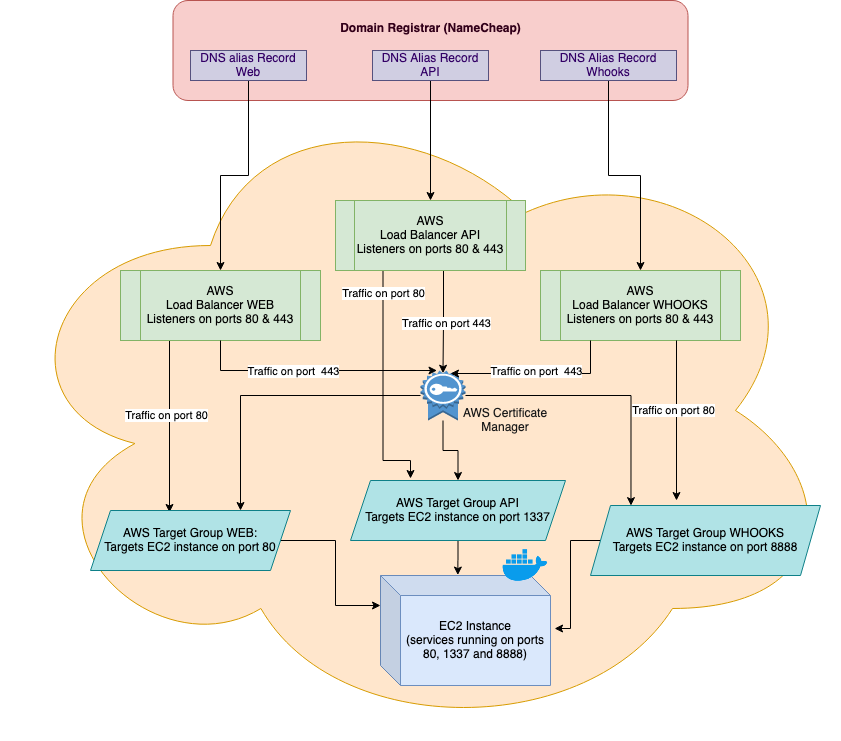

To manage all this in AWS, I'd need to create load balancers, target groups, an SSL certificate and an EC2 instance where my docker containers will actually run. Here is a diagram of all the components.

Let me dive deep into each piece and explain how they work together from top to bottom:

- Domain registrar: this is where I bought my domain and where I create the DNS records that indicate where traffic should be routed. As mentioned earlier, I have a root domain for the web app and subdomains for my API and webhooks server, so I created an alias record for each one and pointed it to the DNS name of the corresponding load balancer, since these load balancers do not have fixed IP addresses.

- AWS Load Balancers: I have one load balancer for each subdomain, and each load balancer has two listeners: one on port 80 for HTTP traffic and another on port 443 for HTTPS traffic.

- AWS Certificate Manager: to create the HTTPS listeners in my load balancers, I had to generate an SSL certificate.

- AWS Target Groups: when a listener receives traffic, it forwards it to a target group which, as the name indicates, targets an EC2 instance on a specific port. In these target groups you can also configure health checks.

- EC2 instance: finally, the server, running Ubuntu. It runs the web app and the API with Docker Compose, plus my webhooks server with PM2 (a Node.js process manager).
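The target group and HTTPS listener pieces can also be created from the AWS CLI instead of the console. The sketch below shows roughly what that looks like with `aws elbv2`. The function names are hypothetical, the VPC ID and ARNs are values you would have to look up in your own account, and the `/` health check path is an assumption.

```shell
# Create a target group that forwards to one port on the EC2 instance.
# Usage: create_target_group <name> <port> <vpc-id> [health-check-path]
create_target_group() {
  local name port vpc_id health_path
  name=$1
  port=$2
  vpc_id=$3
  health_path=${4:-/}   # assumed default, adjust to a real health endpoint
  aws elbv2 create-target-group \
    --name "$name" \
    --protocol HTTP \
    --port "$port" \
    --vpc-id "$vpc_id" \
    --target-type instance \
    --health-check-path "$health_path"
}

# Add the HTTPS listener that uses the ACM certificate and forwards
# everything to the target group.
# Usage: create_https_listener <lb-arn> <certificate-arn> <target-group-arn>
create_https_listener() {
  local lb_arn cert_arn tg_arn
  lb_arn=$1
  cert_arn=$2
  tg_arn=$3
  aws elbv2 create-listener \
    --load-balancer-arn "$lb_arn" \
    --protocol HTTPS \
    --port 443 \
    --certificates "CertificateArn=$cert_arn" \
    --default-actions "Type=forward,TargetGroupArn=$tg_arn"
}
```

For the API, for example, `create_target_group api-tg 1337 <vpc-id>` would match the port the app-api container publishes. The EC2 instance still has to be registered in the target group afterwards.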

These are all the pieces I needed to run the private part of the website. However, as I mentioned earlier, the forms in the public pages run on a different back-end.

AWS Lambda Functions and API Gateway

I decided to separate public and private traffic into different back-ends, as I didn't want one to impact the other. The API for the private part runs on an EC2 instance, but I decided to use AWS Lambda functions for the public part.

I created a separate Lambda function for each piece of functionality needed, like saving subscribers in MongoDB, sending an email when a user leaves a message in the contact form, or validating reCAPTCHA responses. The trigger for all these functions is an HTTP request and, to manage these requests, I had to create an API in Amazon API Gateway. You can find more details about how to create these in this article in my blog.
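Since every one of these functions is triggered by a plain HTTP request, you can exercise them from the command line. Here is a rough sketch of what a call to the subscribe endpoint could look like. The `/subscribe` route, the JSON payload shape and the `subscribe` helper are assumptions for illustration, not the real API.

```shell
# Send a subscription request to an API Gateway endpoint.
# Usage: subscribe <api-base-url> <email>
subscribe() {
  local api_url email
  api_url=$1
  email=$2
  # The email is inserted as-is, so this only works for values
  # that do not need JSON escaping.
  curl -fsS -X POST "$api_url/subscribe" \
    -H 'Content-Type: application/json' \
    -d "{\"email\":\"$email\"}"
}
```

The base URL would be the invoke URL of the API stage, something of the form `https://<api-id>.execute-api.<region>.amazonaws.com/<stage>`.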

Finally, the Twitter bot is just another Lambda function that runs on a schedule instead of being triggered via HTTP. It queries a collection in MongoDB and extracts a random document to build the tweet. Then it uses the Twitter API to post the tweet. It's pretty simple, but it just works 😎.
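For reference, scheduling a Lambda function like this from the AWS CLI takes three calls: create the schedule rule, allow it to invoke the function, and attach the function as the rule's target. The sketch below is one way to do it, assuming an EventBridge schedule rule. The `schedule_lambda` helper, the rule name and the interval are all hypothetical.

```shell
# Run a Lambda function on a schedule.
# Usage: schedule_lambda <rule-name> <schedule-expression> <function-arn>
schedule_lambda() {
  local rule schedule function_arn rule_arn
  rule=$1
  schedule=$2
  function_arn=$3

  # Create (or update) the schedule rule and capture its ARN.
  rule_arn=$(aws events put-rule \
    --name "$rule" \
    --schedule-expression "$schedule" \
    --query RuleArn --output text) || return 1

  # Allow the rule to invoke the function. This call fails if the
  # statement id already exists, for example on a second run.
  aws lambda add-permission \
    --function-name "$function_arn" \
    --statement-id "${rule}-invoke" \
    --action lambda:InvokeFunction \
    --principal events.amazonaws.com \
    --source-arn "$rule_arn" || return 1

  # Point the rule at the function.
  aws events put-targets \
    --rule "$rule" \
    --targets "Id=1,Arn=$function_arn"
}
```

For example, `schedule_lambda tweet-bot "rate(6 hours)" <function-arn>` would invoke the bot every six hours.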

Conclusion

I hope you find this useful. To be honest, I wrote this article because I wanted to have all my notes about everything I've learnt while building this project in one place 😅. I've probably missed a few details so, if you are building a similar project and need some help, feel free to reach out to me on Twitter @uf4no.

Oh, I almost forgot: the project I'm talking about is theLIFEBOARD, a weekly planner that helps people achieve their life goals and create new habits. Check it out and let me know what you think!
